AI Interview Series #4: Transformers vs Mixture of Experts (MoE)

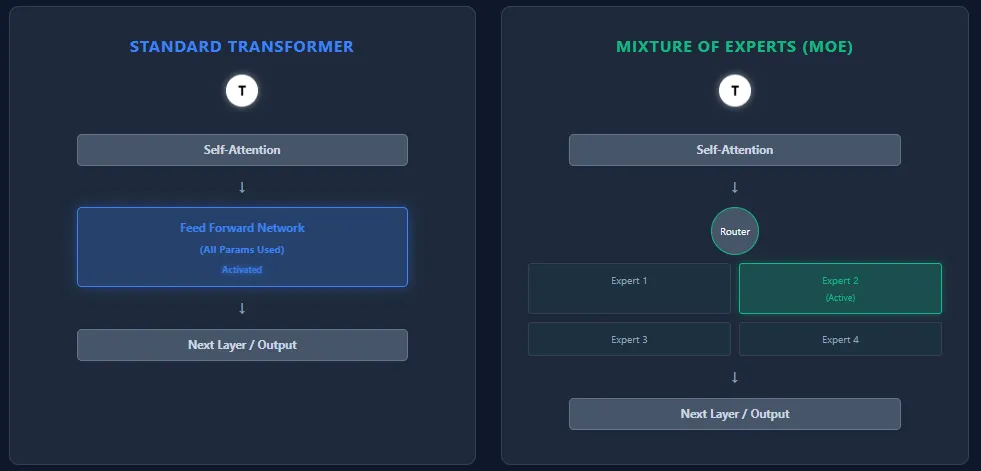

Question: MoE models contain far more parameters than standard (dense) Transformers, yet they can run faster at inference. How is that possible?

Difference between Transformers & Mixture of Experts (MoE)

Transformers and…
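A common answer to the interview question is sparse activation. An MoE layer stores many expert feed-forward networks, but a router sends each token to only the top-k of them. Total parameters therefore grow with the number of experts, while per-token compute grows only with k. The sketch below illustrates this under stated assumptions: a single MoE feed-forward layer, a bias-free linear router, and top-k softmax gating with renormalized weights. The function names (`ffn_params`, `moe_param_counts`, `route_top_k`, `moe_forward`) are hypothetical. The dimensions in the usage lines are illustrative and not taken from any specific model.

```python
import math
from typing import Callable, Dict, List, Tuple

Vector = List[float]


def ffn_params(d_model: int, d_ff: int) -> int:
    """Parameters in one two-layer feed-forward expert (weights + biases)."""
    return 2 * d_model * d_ff + d_ff + d_model


def moe_param_counts(d_model: int, d_ff: int, num_experts: int, top_k: int) -> Tuple[int, int]:
    """Return (total, active-per-token) parameter counts for one MoE layer."""
    per_expert = ffn_params(d_model, d_ff)
    router = d_model * num_experts  # assumed bias-free linear gate
    total = num_experts * per_expert + router
    active = top_k * per_expert + router  # only k experts run for each token
    return total, active


def route_top_k(logits: Vector, k: int) -> Dict[int, float]:
    """Softmax over experts, keep the k largest, renormalize their weights."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    probs = [e / s for e in exps]
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x: Vector, gate_w: List[Vector],
                experts: List[Callable[[Vector], Vector]], k: int) -> Vector:
    """Route one token vector x; gate_w has shape [d_model][num_experts]."""
    num_experts = len(experts)
    # Router logits: x @ gate_w
    logits = [sum(x[j] * gate_w[j][e] for j in range(len(x)))
              for e in range(num_experts)]
    out = [0.0] * len(x)
    # Only the selected experts are evaluated; the others are skipped entirely.
    for i, w in route_top_k(logits, k).items():
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


if __name__ == "__main__":
    # Illustrative sizes only: 8 experts, 2 active per token.
    total, active = moe_param_counts(d_model=4096, d_ff=14336, num_experts=8, top_k=2)
    print(f"total params: {total:,}  active per token: {active:,}")
```

In this sketch, raising `num_experts` increases `total` but leaves `active` unchanged for a fixed `top_k`. That is the sense in which an MoE can be faster than a dense model with the same total parameter count. All experts still have to be held in memory, so the saving is in per-token compute, not in memory footprint.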